Chance News 93: Difference between revisions

==The gold (acre) standard, fool's gold== | |||

:''In scenes that could have come straight from a spy farce, the French journal Prescrire applied to Europe’s drug regulator for information on the diet drug rimonabant. The regulator sent back 68 pages in which virtually every sentence was blacked out.' | |||

<div align=right>--[http://www.economist.com/node/21563689 Review of Bad Pharma in The Economist]</div> | |||

The gold standard in medical research is the randomized clinical trial, RCT. Unfortunately, gold has not been doing particularly well lately in the stock market and if you read Ben Goldacre’s book, Bad Pharma: How Drug Companies Mislead Doctors and Harm Patients, neither have randomized clinical trials. Goldacre is an MD and an epidemiologist as well as a terrific writer and lecturer. Bad Pharma is the most depressing book imaginable for statisticians to read because he shows how RCTs can be manipulated and cause amazing, costly damage to society. | |||

The book is over 400 pages of carefully annotated unrelenting doom. For example, on page x of the Introduction; | |||

<blockquote> | |||

Drugs are tested by the people who manufacture them, in poorly designed trials, on hopelessly small numbers of weird, unrepresentative patients, and analysed using techniques which are flawed by design, in such a way that they exaggerate the benefits of treatment. Unsurprisingly, these trials tend to produce results that favour the manufacturer. When trials throw up results that companies don’t like, they are perfectly entitled to hide them from doctors and patients, so we only ever see a distorted picture of any drug’s true effects. | |||

</blockquote> | |||

MDs | |||

<blockquote> | |||

can be in the pay of drug companies--often undisclosed--and the journals are too. And so are the patient groups. And finally, academic papers, which everyone thinks of as objective, are often covertly planned and written by people who work directly for the companies without disclosures. Sometimes whole academic journals are even owned outright by one drug company. | |||

</blockquote> | |||

All that and we are only at page xi in the Introduction! The rest of the 400 or so pages are devoted to backing up his incendiary introductory assertions. Fascinating reading, all of it, but a short synopsis may be found [http://www.csicop.org/specialarticles/show/bad_pharma_interview_with_ben_goldacre/ here] and a marvelous and witty TED talk by Goldacre [http://www.ted.com/talks/ben_goldacre_what_doctors_don_t_know_about_the_drugs_they_prescribe.html here]. An earlier and equally amusing TED talk by Goldacre about similar subject matter may be found [http://www.youtube.com/watch?v=h4MhbkWJzKk here]. | |||

===Discussion=== | |||

1. His Chapter 4 is entitled, “Bad Trials” and contains over 50 pages. The subheadings are | |||

*Outright fraud | |||

*Test your treatment in freakishly perfect ‘ideal’ patients | |||

*Test your drug against something useless | |||

*Trials that are too short | |||

*Trials that stop early | |||

*Trials that stop late | |||

*Trials that are too small | |||

*Trials that measure uninformative outcomes | |||

*Trials that bundle their outcomes together in odd ways | |||

*Trials that ignore drop-outs | |||

*Trials that change their main outcome after they’ve finished | |||

*Dodgy subgroup analyses | |||

*Dodgy subgroups of trials, rather than patients | |||

*‘Seeding Trials’ | |||

*Pretend it’s all positive regardless | |||

Each subheading is illustrated with actual drug trials. Any one of those “Bad Trials” has frightening consequences. Pick a trial mentioned in the book and present Goldacre’s evidence. | |||

2. He devotes 100 pages to the topic “Missing Data.” Because Tamiflu is considered at length in this chapter, compare and contrast what Goldacre writes about Tamiflu with what appears in [http://en.wikipedia.org/wiki/Oseltamivir Wikipedia about oseltamivir]. | |||

3. Another 100 pages in the book is covered under “Marketing.” In particular, note the role played by KOLs, “key opinion leaders.” | |||

4. Goldacre’s last chapter is entitled, “Afterword: Better Data” where he pleads for “full disclosure: | |||

<blockquote> | |||

We now know that this entire evidence base has been systematically distorted by the pharmaceutical industry, which has deliberately and selectively withheld the results of trials whose results it didn’t like, while publishing the ones with good results…It is not enough for companies to say that they won’t withhold data from new trials starting from now: we need the data from older trials, which they are still holding back, about the drugs we are using every day. | |||

</blockquote> | |||

To the workers in the pharmaceutical industry, “I also encourage you to become a whistleblower” and “If you need any help, just ask: I will do everything I can to assist you.” Given the recent experience of whistleblowers in government employ, how realistic is Goldacre’s plea? | |||

5. A typical RCT has a placebo arm to be compared to the treatment arm. According to | |||

[http://asserttrue.blogspot.com/2013/03/placebos-are-becoming-more-effective.html Kas Thomas] | |||

<blockquote> | |||

The problem of rising placebo response is seen as a crisis in the pharma industry, where psych meds are runaway best-sellers, because it impedes approval of new drugs. Huge amounts of money are on the line. | |||

</blockquote> | |||

Thomas further [http://asserttrue.blogspot.co.nz/2013/03/new-patents-aim-to-reduce-placebo-effect.html asserts] | |||

<blockquote> | |||

The drug companies say these failures [of the drugs to outperform the placebos] are happening not because their drugs are ineffective, but because placebos have recently become more effective in clinical trials. | |||

</blockquote> | |||

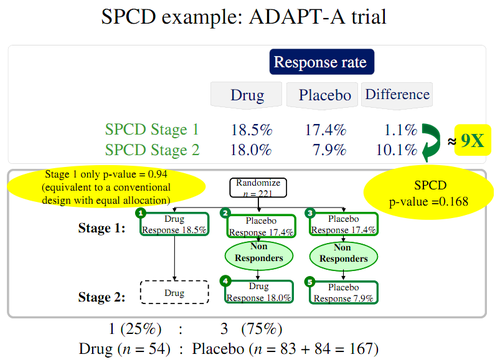

In order to mitigate the effectiveness of placebos, drug manufacturers have devised SPCD, The Sequential Parallel Comparison Design: | |||

<blockquote> | |||

SPCD is a cascading (multi-phase) protocol design. In the canonical two-phase version, you start with a larger-than-usual group of placebo subjects relative to non-placebo subjects. In phase one, you run the trial as usual, but at the end, placebo non-responders are randomized into a second phase of the study (which, like the first phase, uses a placebo control arm and a study arm). | |||

</blockquote> | |||

Below is an illustration of how SPCD works and its possible benefits: | |||

<center> [[File:PlaceboSPCDProtocol.png | 500px]]</center> | |||

Thomas is very suspicious of the procedure: | |||

<blockquote> | |||

This bit of chicanery (I don't know what else to call it) seems pointless until you do the math…basically, if you optimize the ratio of placebo to study-arm subjects properly, you end up increasing the overall power of the study while keeping placebo response minimized. This translates to big bucks for pharma companies, who strive mightily to keep the cost of drug trials down by enrolling only as many subjects as might be needed to give the study the desired power. In other words, maximizing study power per enrollee is key. And SPCD does that. | |||

<br><br> | |||

The whole idea that placebo effect is getting in the way of producing meaningful results is repugnant, I think, to anyone with scientific training. | |||

What's even more repugnant, however, is that…[it] didn't stop with a mere paper in Psychotherapy and Psychosomatics…[the creators of SPCD] …went on to apply for, and obtain, U.S. patents on SPCD (on behalf of The General Hospital Corporation of Boston). The relevant U.S. patent numbers are 7,647,235; 7,840,419; 7,983,936; 8,145,504; 8,145,505, and 8,219,41, the most recent of which was granted July 2012. You can look them up on Google Patents. | |||

<br><br> | |||

The patents begin with the statement: "A method and system for performing a clinical trial having a reduced placebo effect is disclosed." Incredibly, the whole point of the invention is to mitigate (if not actually defeat) the placebo effect. I don't know if anybody else sees this as disturbing. To me it's repulsive. | |||

</blockquote> | |||

The well-known statistician, Stephen Senn, made several comments at Thomas’ blog: | |||

<blockquote> | |||

The statisticians involved, at least, ought to know better. 1.They are assuming that placebo response is genuine and not just regression to the mean (the latter is usually grossly underestimated). 2. they are assuming that it is reproducible and not transient. 3. they are assuming that the FDA is asleep. If the Agency is not dozing they will make them market the drug as being only suitable for those who have a proven inability to respond to placebo. That should sort them out. | |||

<br><br> | |||

[I]f you take the point of view that the practical question is to see how a drug improves the situation beyond what would normally happen then it seems appropriate to have as a control what would normally happen. What is labelled placebo response is in any case in most cases just regression to the mean. I regard all this placebo response stuff as just so much junk science. | |||

</blockquote> | |||

In Stage 1 of the above figure for the ADAPT-A trial, the drug had 10 successes in 54 trials (18.5 %) while the placebo had 29 successes in 167 trials (17.4%). Ignoring the Stage 2 entirely, what do these numbers indicate about rejecting the null hypothesis (drug is equivalent to placebo)? | |||

6. One possible easy way to make the placebo less effective is to tell the subject that he is receiving a worthless sugar pill rather than real medication. Why is this inexpensive, straightforward procedure not utilized? | |||

Submitted by Paul Alper | |||

Revision as of 12:43, 9 May 2013

Quotations

“The magic of statistics cannot create information when there is none.”

Submitted by Margaret Cibes

"He [Stapel] viewed himself as giving his audience what they craved: 'structure, simplicity, a beautiful story.' Stapel glossed over experimental details, projecting the air of a thinker who has no patience for methods. The tone of his talks, he said, was 'Let’s not talk about the plumbing, the nuts and bolts -- that’s for plumbers, for statisticians.'"

[Stapel is the disgraced Dutch academic who faked his data to show statistical significance].

Submitted by Paul Alper

"Statistics is a subject that many medics find easy but most statisticians find difficult."

Submitted by Paul Alper

Forsooth

Ignorance is bliss, or is it?

“I remember a few years ago looking at peer rankings of graduate departments and being delighted to discover that among statistics departments my own alma mater was in the top ten (although my trust in the process was tempered by the knowledge that, despite its lofty ranking, Princeton’s statistics department had been disbanded decades earlier.)”

“As they do on many obscure policy issues, Americans polarize sharply along partisan lines when they learn that President Barack Obama supports a repeal of the 1975 Public Affairs Act. …. There's one striking problem here: The 1975 Public Affairs Act does not exist. …. In a series of surveys that polled national samples about similarly fictitious or otherwise unknown legislation, [pollsters] found 20 to 40 percent of Americans were willing to offer opinions on laws they have never heard of.”

“Most uses of the classical tools of statistics have been, are, and will be, made by those who know not what they do.”

Submitted by Margaret Cibes

Justice flunks math

Thanks to Bob Griffin, who sent a link to the following op/ed:

Justice flunks math, by Leila Schneps and Coralie Colmez, New York Times, 26 March 2013

This op/ed piece is a response to the recent developments in the case of Amanda Knox (Italy’s highest court overturns acquittal of Amanda Knox, NYT, 26 March 2013). In 2009, Knox and her ex-boyfriend were convicted in Italy for the murder of Knox's roommate. The twists and turns in her prosecution have been widely documented in the media. In 2011, an appeals court overturned the convictions. But now the Court of Cassation has ordered that the case be retried.

According to Schneps and Colmez, "The Court of Cassation has not yet publicly explained the motivations behind its ruling. But the appellate judge’s failure to understand probability may well play a role." A judge has refused to order a retest of a DNA sample, recovered in 2007 from a knife in Knox's apartment, that had been tentatively linked to the victim. They continue:

Whatever concerns the judge might have had regarding the reliability of DNA tests, he demonstrated a clear mathematical fallacy: assuming that repeating the test could tell us nothing about the reliability of the original results.

Imagine, for example, that you toss a coin and it lands on heads 8 or 9 times out of 10. You might suspect that the coin is biased. Now, suppose you then toss it another 10 times and again get 8 or 9 heads. Wouldn’t that add a lot to your conviction that something’s wrong with the coin? It should.

Schneps and Colmez have collaborated on a recent book, Math on Trial: How Numbers Get Used and Abused in the Courtroom, which explores misapplications of statistics in criminal trials.
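
The coin-tossing argument quoted above can be checked with a short calculation. The sketch below is not from the op/ed: it assumes a fair coin as the null hypothesis, reads "8 or 9 heads" as "at least 8 heads in 10 tosses," and pools the two rounds as "at least 16 heads in 20 tosses." The helper name binom_tail is our own.

```python
from math import comb


def binom_tail(n: int, k: int, p: float = 0.5) -> float:
    """Return P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Assumption: a fair coin (p = 0.5) is the hypothesis being questioned.
first_round = binom_tail(10, 8)    # at least 8 heads in the first 10 tosses
both_rounds = binom_tail(20, 16)   # at least 16 heads in all 20 tosses

print(f"P(at least 8 of 10 | fair coin)  = {first_round:.4f}")
print(f"P(at least 16 of 20 | fair coin) = {both_rounds:.4f}")
```

Under these assumptions, the chance that a fair coin produces so many heads shrinks considerably once the second round is added, which is the sense in which repeating a test adds information about the original result.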

Correlation vs. causation tales

Here are two recent stories that show the need for constant vigilance in explaining that association is not causation.

Double majors produce dynamic thinkers, study finds.

by Dan Berrett, Chronicle of Higher Education, 15 March 2013

Jeff Witmer sent this article to the Isolated Statisticians list, and also this link to the original study.

The Chronicle site requires a subscription, but Jeff provided the two revealing quotations that appear below. First the description of the findings:

- "Students who major in two fields are more apt than their single-majoring peers to think both integratively and creatively, according to a new study."

Of course, we should ask ourselves whether the increase in creativity was a result of double-majoring, or whether integrative thinkers are more likely to choose double-majors. As Jeff points out, this point was apparently lost on the Chronicle, which ironically followed up with this statement.

- "While the rate of double majors at the nation's colleges is 9 percent, it was 19 percent in the researchers' sample, reflecting the generally high caliber of students at elite institutions."

In another recent example, Paul Alper sent a link to the following (and also a response from Andrew Gelman's blog):

Researchers finally replicated Reinhart-Rogoff, and there are serious problems

by Mike Konczal, Next New Deal blog, 16 April 2013

Reinhart and Rogoff are economists whose writings about the relationship between economic growth and government debt have been frequently cited in the discussion of the US national debt (here is a link from the Wall Street Journal to the 2010 Reinhart-Rogoff paper, Growth in a time of debt). In his blog post, Konczal quotes Paul Ryan's Path to Prosperity budget as saying that Reinhart-Rogoff "found conclusive empirical evidence that [debt] exceeding 90 percent of the economy has a significant negative effect on economic growth."

Not surprisingly, deficit hawks argue that increased spending has led to excessive debt, causing slower growth. But, as with the previous story, we should be asking whether the causality could go the other way: perhaps slower growth has driven up the debt by reducing government revenues. Probably debt and growth interact in a more complicated way than either of these simple explanations suggests. The point again is not to seize on an observed association as definitive proof for one's favored theory.

Now even more serious trouble has emerged. Other researchers trying to reproduce the analysis uncovered errors in the spreadsheet model Reinhart and Rogoff used to code their data. The mistake had the effect of excluding some high-debt countries that did not experience subpar growth (see The Excel depression by Paul Krugman, New York Times, 18 April 2013). There also appeared to be some selective omissions of high-debt years that did not fit the theory. When these problems are corrected, the famous 90 percent figure no longer stands out as a critical turning point. But of course this was the feature that drew so much attention to the model in the first place.

Paul Alper sent a link to Andrew Gelman's blogpost Memo to Reinhart and Rogoff: I think it’s best to admit your errors and go on from there (16 April 2013).

Submitted by Bill Peterson

Randomly funny

“These Amazon Products Are No Joke, But the Online Reviews Are”

The Wall Street Journal, April 30, 2013

Apparently there are thousands of people submitting satirical reviews of books and other products to Amazon’s website.

This article’s opening focus was on reviews of RAND’s 600-page, 60-year-old paperback A Million Random Digits With 100,000 Normal Deviates. (See “A Million Random Digits” for a pdf or e-copy of the book, as well as RAND’s motivation for preparing the book.)

Here are two creative reviews:

“Almost perfect …. But with so many terrific random digits, it's a shame they didn't sort them, to make it easier to find the one you're looking for.”

“[T]he first thing I thought to myself after reading chapter one was, ‘Look out, Harry Potter!’”

The article also reported:

One Amazon reviewer panned a real-life copycat publication called "A Million Random Digits THE SEQUEL: with Perfectly Uniform Distribution." "Let's be honest, 4735942 is just a rehashed version of 64004382, and 32563233 is really nothing more than 97132654 with an accent."

And Amazon’s uranium ore sample product drew the review, "I purchased this product 4.47 billion years ago and when I opened it today, it was half empty."

This article, including reviews of many other books and products, is a wonderful end-of-year read! The 59 comments are also interesting, as many discuss randomness and/or cite other sources of satirical reviews!

Submitted by Margaret Cibes

Significance magazine free to all in 2013

To celebrate the International Year of Statistics, all 2013 issues of Significance magazine are FREE to read via the Significance iOS and Android apps. I was also able to access them on my computer without my subscriber login. Sign in here.

Some tantalizing tidbits from the February 2013 issue:

"The world's ecosystems provide services to mankind valued at $16-54 trillion per year – a value which has been described as 'a serious underestimate of infinity.'"

"[T]he combined evidence does indicate him [Lance Armstrong] as a singular outlier even without considering drugs. …. [T]here was also a significant science behind his riding. …. When it is all assembled, it is improbable that Armstrong's advanced doping could explain his consecutive wins."

"We have shown that the normal distribution, combined with current information available on height of fully grown adults, is just not enough to exclude the possibility that a person with negative body height could exist."

Patrick Perlman (student), "When will we see people of negative height?"

Submitted by Margaret Cibes

The gold (acre) standard, fool's gold

- "In scenes that could have come straight from a spy farce, the French journal Prescrire applied to Europe’s drug regulator for information on the diet drug rimonabant. The regulator sent back 68 pages in which virtually every sentence was blacked out." (Review of Bad Pharma in The Economist, http://www.economist.com/node/21563689)

The gold standard in medical research is the randomized clinical trial (RCT). Unfortunately, gold has not been doing particularly well lately in the markets, and if you read Ben Goldacre’s book, Bad Pharma: How Drug Companies Mislead Doctors and Harm Patients, neither have randomized clinical trials. Goldacre is an MD and an epidemiologist as well as a terrific writer and lecturer. Bad Pharma is the most depressing book imaginable for statisticians to read because Goldacre shows how RCTs can be manipulated to cause enormous, costly damage to society.

The book is over 400 pages of carefully annotated, unrelenting doom. For example, on page x of the Introduction:

Drugs are tested by the people who manufacture them, in poorly designed trials, on hopelessly small numbers of weird, unrepresentative patients, and analysed using techniques which are flawed by design, in such a way that they exaggerate the benefits of treatment. Unsurprisingly, these trials tend to produce results that favour the manufacturer. When trials throw up results that companies don’t like, they are perfectly entitled to hide them from doctors and patients, so we only ever see a distorted picture of any drug’s true effects.

MDs "can be in the pay of drug companies--often undisclosed--and the journals are too. And so are the patient groups. And finally, academic papers, which everyone thinks of as objective, are often covertly planned and written by people who work directly for the companies without disclosures. Sometimes whole academic journals are even owned outright by one drug company."

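All that and we are only at page xi in the Introduction! The rest of the 400 or so pages are devoted to backing up his incendiary introductory assertions. It is fascinating reading, all of it, but a short synopsis may be found here (http://www.csicop.org/specialarticles/show/bad_pharma_interview_with_ben_goldacre/) and a marvelous and witty TED talk by Goldacre here (http://www.ted.com/talks/ben_goldacre_what_doctors_don_t_know_about_the_drugs_they_prescribe.html). An earlier and equally amusing TED talk by Goldacre about similar subject matter may be found here (http://www.youtube.com/watch?v=h4MhbkWJzKk).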

Discussion

1. Chapter 4, entitled “Bad Trials,” runs to over 50 pages. Its subheadings are:

- Outright fraud

- Test your treatment in freakishly perfect ‘ideal’ patients

- Test your drug against something useless

- Trials that are too short

- Trials that stop early

- Trials that stop late

- Trials that are too small

- Trials that measure uninformative outcomes

- Trials that bundle their outcomes together in odd ways

- Trials that ignore drop-outs

- Trials that change their main outcome after they’ve finished

- Dodgy subgroup analyses

- Dodgy subgroups of trials, rather than patients

- ‘Seeding Trials’

- Pretend it’s all positive regardless

Each subheading is illustrated with actual drug trials. Any one of those “Bad Trials” has frightening consequences. Pick a trial mentioned in the book and present Goldacre’s evidence.

2. He devotes 100 pages to the topic “Missing Data.” Because Tamiflu is considered at length in this chapter, compare and contrast what Goldacre writes about Tamiflu with what appears in Wikipedia about oseltamivir (http://en.wikipedia.org/wiki/Oseltamivir).

3. Another 100 pages of the book are devoted to “Marketing.” In particular, note the role played by KOLs, “key opinion leaders.”

4. Goldacre’s last chapter is entitled “Afterword: Better Data,” where he pleads for “full disclosure”:

We now know that this entire evidence base has been systematically distorted by the pharmaceutical industry, which has deliberately and selectively withheld the results of trials whose results it didn’t like, while publishing the ones with good results…It is not enough for companies to say that they won’t withhold data from new trials starting from now: we need the data from older trials, which they are still holding back, about the drugs we are using every day.

To workers in the pharmaceutical industry, he writes, “I also encourage you to become a whistleblower” and “If you need any help, just ask: I will do everything I can to assist you.” Given the recent experience of whistleblowers in government employ, how realistic is Goldacre’s plea?

5. A typical RCT has a placebo arm to be compared to the treatment arm. According to Kas Thomas (http://asserttrue.blogspot.com/2013/03/placebos-are-becoming-more-effective.html):

The problem of rising placebo response is seen as a crisis in the pharma industry, where psych meds are runaway best-sellers, because it impedes approval of new drugs. Huge amounts of money are on the line.

Thomas further asserts (http://asserttrue.blogspot.co.nz/2013/03/new-patents-aim-to-reduce-placebo-effect.html):

The drug companies say these failures [of the drugs to outperform the placebos] are happening not because their drugs are ineffective, but because placebos have recently become more effective in clinical trials.

In order to mitigate the effectiveness of placebos, the Sequential Parallel Comparison Design (SPCD) has been devised:

SPCD is a cascading (multi-phase) protocol design. In the canonical two-phase version, you start with a larger-than-usual group of placebo subjects relative to non-placebo subjects. In phase one, you run the trial as usual, but at the end, placebo non-responders are randomized into a second phase of the study (which, like the first phase, uses a placebo control arm and a study arm).

Below is an illustration of how SPCD works and its possible benefits (figure: PlaceboSPCDProtocol.png, showing the ADAPT-A trial):

Thomas is very suspicious of the procedure:

This bit of chicanery (I don't know what else to call it) seems pointless until you do the math…basically, if you optimize the ratio of placebo to study-arm subjects properly, you end up increasing the overall power of the study while keeping placebo response minimized. This translates to big bucks for pharma companies, who strive mightily to keep the cost of drug trials down by enrolling only as many subjects as might be needed to give the study the desired power. In other words, maximizing study power per enrollee is key. And SPCD does that.

The whole idea that placebo effect is getting in the way of producing meaningful results is repugnant, I think, to anyone with scientific training. What's even more repugnant, however, is that…[it] didn't stop with a mere paper in Psychotherapy and Psychosomatics…[the creators of SPCD] …went on to apply for, and obtain, U.S. patents on SPCD (on behalf of The General Hospital Corporation of Boston). The relevant U.S. patent numbers are 7,647,235; 7,840,419; 7,983,936; 8,145,504; 8,145,505, and 8,219,41, the most recent of which was granted July 2012. You can look them up on Google Patents.

The patents begin with the statement: "A method and system for performing a clinical trial having a reduced placebo effect is disclosed." Incredibly, the whole point of the invention is to mitigate (if not actually defeat) the placebo effect. I don't know if anybody else sees this as disturbing. To me it's repulsive.

The well-known statistician, Stephen Senn, made several comments at Thomas’ blog:

The statisticians involved, at least, ought to know better. 1. They are assuming that placebo response is genuine and not just regression to the mean (the latter is usually grossly underestimated). 2. they are assuming that it is reproducible and not transient. 3. they are assuming that the FDA is asleep. If the Agency is not dozing they will make them market the drug as being only suitable for those who have a proven inability to respond to placebo. That should sort them out.

[I]f you take the point of view that the practical question is to see how a drug improves the situation beyond what would normally happen then it seems appropriate to have as a control what would normally happen. What is labelled placebo response is in any case in most cases just regression to the mean. I regard all this placebo response stuff as just so much junk science.

In Stage 1 of the above figure for the ADAPT-A trial, the drug had 10 successes in 54 trials (18.5%) while the placebo had 29 successes in 167 trials (17.4%). Ignoring Stage 2 entirely, what do these numbers indicate about rejecting the null hypothesis (that the drug is equivalent to placebo)?

6. One possible easy way to make the placebo less effective is to tell the subject that he is receiving a worthless sugar pill rather than real medication. Why is this inexpensive, straightforward procedure not utilized?
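
For the Stage 1 counts quoted in question 5 (10 of 54 on the drug, 29 of 167 on placebo), one possible way to examine the null hypothesis is a pooled two-proportion z-test. The sketch below is only an illustration and is not the analysis used in the ADAPT-A trial: the function name two_proportion_z and the choice of a normal approximation are our assumptions, and with only 10 successes in the drug arm an exact test might be preferred.

```python
from math import erfc, sqrt


def two_proportion_z(x1: int, n1: int, x2: int, n2: int) -> tuple[float, float]:
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    p1, p2 = x1 / n1, x2 / n2
    # Under the null hypothesis both arms share one success probability.
    pooled = (x1 + x2) / (n1 + n2)
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided tail area of the standard normal: 2 * (1 - Phi(|z|)).
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value


# Stage 1 counts as quoted in the text: drug 10/54, placebo 29/167.
z, p_value = two_proportion_z(10, 54, 29, 167)
print(f"drug: {10 / 54:.1%}, placebo: {29 / 167:.1%}")
print(f"z = {z:.2f}, two-sided p-value = {p_value:.2f}")
```

With these counts the observed difference is small relative to its standard error, so Stage 1 on its own gives no grounds for rejecting the null hypothesis.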

Submitted by Paul Alper