Chance News 18: Difference between revisions


==Quotation==

<blockquote>Single 40-year-old women have a better chance of being killed by a terrorist than getting married.</blockquote>

<div align="right" >[http://msnbc.msn.com/id/12940202/site/newsweek/ Too Late for Prince Charming]<br>Newsweek, June 2, 1986</div>

See: [http://chance.dartmouth.edu/chancewiki/index.php/Chance_News_18#Newsweek_says_they_were_wrong Newsweek says they were wrong]

==Forsooths==

These Forsooths are from the June 2006 ''RSS News''.

<blockquote> This summer there's about a 50 per cent probability that there will be above normal temperatures for much of Britain and Europe.<br>

<div align=right>''The Times''<br>

5 March 2004

</div></blockquote>

----

<blockquote> To convert kilometres to miles multiply by .6214; kilometres/hour to miles/hour multiply by .6117<br>

<div align=right>''Schott's Almanac'', page 193, Table of Conversions.

</div></blockquote>

----

<blockquote>

The BBC remains just ahead of commercial radio in the UK, with a 67% share of all listeners compared with 64%.

<br>

<div align="right">BBC news website<br>

2 February 2006

</div></blockquote>

----

==Statz Rappers==

[http://video.google.com/videoplay?docid=489221653835413043 A statistics class at the University of Oregon had an imaginative graduate teaching assistant.]

==How to Lie with Statistics Turns Fifty==

"How to Lie with Statistics Turns Fifty"<br>

[http://www.imstat.org/sts/issue_20_3.html Special Section: ''Statistical Science'', Vol. 20, No. 3, August 2005]

''The College Mathematics Journal'' (CMJ) has a column called "Media Highlights" that covers mathematics generally; its reviews often involve probability or statistical concepts. In the May 2006 issue of CMJ, Norton Starr reviews this special section of ''Statistical Science'', which marks the 50th birthday of Darrell Huff's famous book "How to Lie with Statistics" with articles contributed by several authors. These articles are:

"Darrell Huff and Fifty Years of How to Lie with Statistics", Michael Steele.

"Lying with Maps", Mark Monmonier.

"How to Confuse with Statistics or: The Use and Misuse of Conditional Probabilities", Walter Krämer and Gerd Gigerenzer.

"How to Lie with Bad Data", Richard D. De Veaux and David J. Hand.

"In Search of the Magic Lasso: The Truth About the Polygraph", Stephen E. Fienberg and Paul C. Stern.

Norton gives a nice description of each of the papers, including some of his own insightful comments. We will restrict ourselves to some quotes from the articles that we found particularly interesting.

Michael Steele tells us the story of the life of Darrell Huff and begins with:

the most widely read statistics book in the history
of the world. <br><br>
There is some irony to the world's most famous statistics
book having been written by a person with no
formal training in statistics, but there is also some logic

<blockquote> We all know statistical literacy is an important problem, but we’re not going to be able to agree on its place in the curriculum. Which means that "How to Lie with Statistics" is going to continue to be needed in the years ahead. </blockquote>

When we read "The Bell Curve" by Richard Herrnstein and Charles Murray to review for Chance News, it seemed to us that the reviewers in the major newspapers could not have actually read the book. So we wrote a long review of the book for Chance News ([http://www.dartmouth.edu/~chance/chance_news/recent_news/recent.html Chance News 3.15, 3.16, 4.01]).

In his article Charles Murray explains six ways to knock down a book. He describes these as:

<blockquote> Tough but effective strategies for making people think that the target book is an irredeemable mess, the findings are meaningless, the author is incompetent and devious and the book’s thesis is something it isn’t. </blockquote>

Our experience with "The Bell Curve" made us realize that we may have seen an example of his sixth way to knock down a book, which he calls "THE BIG LIE" and describes as follows:

<blockquote>Finally, let us turn from strategies based on half-truths
and misdirection to a more ambitious approach:
to borrow from Goebbels, the Big Lie.

(note that the discussion need not be a long one, nor is
it required that the target book takes a strong position,
nor need the topic be relevant to the book's main argument).
Once this condition is met, you can restate the
book's position on this topic in a way that most people
will find repugnant (e.g., women are inferior to men,
blacks are inferior to whites, we don't need to worry
about the environment), and then claim that this repugnant
position is what the book is about.<br><br>

show or a syndicated columnist is unlikely to repeat
a technical criticism of the book, but a nicely framed
Big Lie can be newsworthy. And remember: It's not
just the public who won't read the target book. Hardly
anybody in the media will read it either. If you can get
your accusation into one important outlet, you can start

mind.</blockquote>

Finally, we agree with Norton's final remark in his review:

<blockquote> The articles are both a compliment to and a complement of Huff's pathbreaking venture in writing. [http://www.imstat.org/sts/issue_20_3.html This issue of ''Statistical Science''] is destined to be a collector's item.</blockquote>

Submitted by Laurie Snell

==What does "unable to replicate" mean?==

[http://www.bloomberg.com/apps/news?pid=10000088&sid=a1ELJy6bUuTk&refer=culture "Freakonomics" Author and HarperCollins Sued for Defamation], Kevin Orland, April 11, 2006, Bloomberg.com.

John Lott is an economist whose book "More Guns, Less Crime" uses a multiple linear regression model to argue that crime rates go down when states pass "concealed carry" laws. Concealed carry laws allow citizens to apply for the right to legally carry a concealed gun for their own protection. The regression model controlled for a large number of possible confounding variables. The theory is that if criminals do not know which of their victims might be armed, they will be more reluctant to mug strangers. This theory is very controversial and has come under attack from gun control advocates.

Steven D. Levitt, an economist, and Stephen J. Dubner, a journalist, published a book "Freakonomics" that uses a multiple linear regression model in Chapter 4 to argue that states with a high abortion rate saw a larger drop in crime than states with a low abortion rate. The regression model controlled for a large number of possible confounding variables. The theory is that if abortion laws reduced the number of "unwanted children", fewer children would grow up in an environment of neglect and end up becoming criminals. This theory is very controversial and has come under attack from right-to-life groups.

It is not too surprising that the authors of two such provocative regression models would end up in a public clash. Levitt and Dubner criticize Lott's research in their book, and Lott has responded by suing.

<blockquote>Lott said in a federal lawsuit filed yesterday in Chicago that Levitt, a University of Chicago economist, defamed him when he wrote that other scholars have been unable to replicate Lott's research linking lower crime rates with the right to carry guns. The passage amounts to an allegation that Lott falsified his results, according to the suit.</blockquote>

There are actually much stronger allegations about fraud concerning Lott's research. Timothy Noah, for example, published an article in Slate magazine about Lott with the title "[http://www.slate.com/id/2078084/ Another firearms scholar whose dog ate his data.]"

But apparently, the allegation of failure to replicate is more serious.

<blockquote>The allegation "damages Lott's reputation in the eyes of the academic community in which he works, and in the minds of the hundreds of thousands of academics, college students, graduate students, and members of the general public who read 'Freakonomics,'" Lott said in the lawsuit.</blockquote>

The remedies suggested by Lott are rather harsh.

<blockquote>Lott's suit asks for a halt in sales, a retraction in the next printing of the book and unspecified damages from Levitt and HarperCollins.</blockquote>

Interestingly enough, the suit does not mention the co-author, Stephen Dubner.

===Questions===

1. What does the phrase "unable to replicate" mean to you? Does replication mean different things in economics versus medicine? Is "unable to replicate" a code phrase used to hint that the data is fraudulent?

2. Why do you think that Lott sued Levitt and not Noah?

3. What impact might this lawsuit have on scientific criticism?

Submitted by Steve Simon

==Newsweek says they were wrong==

[http://msnbc.msn.com/id/13007828/site/newsweek/ Marriage by the Numbers]<br> Newsweek, June 6, 2006, Society; Pg. 40<br>

Daniel McGinn; With Andrew Murr, Karen Springen, Joan Raymond, Marc Bain, Alice-Azania Jarvis and Sam Register

[http://msnbc.msn.com/id/12940202/site/newsweek/ Too Late for Prince Charming]<br>Newsweek, June 2, 1986, Lifestyle, Pg. 58<br>

Eloise Salholz, Rennee Michael, Mark Starr, Shawn Doherty, Pamela Abramson, Pat Wingert.

[http://www.latimes.com/news/opinion/commentary/la-oe-daum3jun03,0,6461972.column?coll=la-home-commentary Lies, damn lies and marriage statistics]<br> ''Los Angeles Times'', June 3, 2006 Editorial Pages Desk; Part B; Pg. 17 <br>

Meghan Daum.

The 1986 Newsweek article begins with:

<blockquote>HIGHLIGHT:<br>A new study reports that college-educated women who are still single at the age of 35 have only a 5 percent chance of ever getting married<br>

BODY:<br>

Her sister had heard about it from a friend who had heard about it on "Phil Donahue" that morning. Her mother got the bad news via a radio talk show later that afternoon. So by the time Harvard graduate Carol Owens, 23, sat down to a family dinner in Boston, the discussion of the man shortage had reached a feverish pitch. With six unmarried daughters, Carol's said her mother was sounding an alarm. "You've got to get out of the house and meet someone," she insisted. "Now." </blockquote>

After two more such examples the article goes on to say:

<blockquote>The traumatic news came buried in an arid demographic study titled, innocently enough, "Marriage Patterns in the United States." But the dire statistics confirmed what everybody suspected all along: that many women who seem to have it all -- good looks and good jobs, advanced degrees and high salaries -- will never have mates. According to the report, white, college-educated women born in the mid-'50s who are still single at 30 have only a 20 percent chance of marrying. By the age of 35 the odds drop to 5 percent. Forty-year-olds are more likely to be killed by a terrorist: they have a minuscule 2.6 percent probability of tying the knot.</blockquote>

Although the study reported only on white, college-educated women, it was clearly the sentence "Forty-year-olds are more likely to be killed by a terrorist" that gave the article such a big impact on the public. We read further:

<blockquote>Within days, that study, as it came to be known, set off a profound crisis of confidence among America's growing ranks of single women. For years bright young women single-mindedly pursued their careers, assuming that when it was time for a husband they could pencil one in. They were wrong. "Everybody was talking about it and everybody was hysterical," says Bonnie Maslin, a New York therapist. "One patient told me 'I feel like my mother's finger is wagging at me, telling me I shouldn't have waited'." Those who weren't sad got mad. The study infuriated the contentedly single, who thought they were being told their lives were worthless without a man. "I'm not a little spinster who sits home Friday night and cries," says Boston contractor Lauren Aronson, 29. "I'm not married, but I still have a meaningful life with meaningful relationships."</blockquote>

On the cover of the 2006 article we see:

<center><font= 5>'''20 Years Ago</font><br><font= 3>Newsweek Predicted a Single 40-Year-Old Woman <br> Had a Better Chance of Being Killed by a Terrorist <br> Than Getting Married. Why We Were Wrong'''. </font></center>

From the 2006 Newsweek article we read:

<blockquote> To mark the anniversary of the "Marriage Crunch" cover, NEWSWEEK located 11 of the 14 single women in the story. Among them, eight are married and three remain single. Several have children or stepchildren. None divorced. Twenty years ago Andrea Quattrocchi was a career-focused Boston hotel executive and reluctant to settle for a spouse who didn't share her fondness for sailing and sushi. Six years later she met her husband at a beachfront bar; they married when she was 36. Today she's a stay-at-home mom with three kids--and yes, the couple regularly enjoys sushi and sailing. "You can have it all today if you wait--that's what I'd tell my daughter," she says. " 'Enjoy your life when you're single, then find someone in your 30s like Mommy did'." </blockquote>

The writers for Newsweek go on to say:

<blockquote> The research that led to the highly touted marriage predictions began at Harvard and Yale in the mid-1980s. Three researchers--Neil Bennett, David Bloom and Patricia Craig--began exploring why so many women weren't marrying in their 20s, as most Americans traditionally had. Would these women still marry someday, or not at all? To find an answer, they used "life table" techniques, applying data from past age cohorts to predict future behavior--the same method typically used to predict mortality rates. "It's the staple [tool] of demography," says Johns Hopkins sociologist Andrew Cherlin. "They were looking at 40-year-olds and making predictions for 20-year-olds." The researchers focused on women, not men, largely because government statisticians had collected better age-of-marriage data for females as part of its studies on fertility patterns and birthrates.<br><br>

Enter NEWSWEEK. We were hardly the first to make a big deal out of their findings, which began getting heavy media attention after the Associated Press wrote about the study that February. People magazine put the study on its cover in March with the headline the new look in old maids. And NEWSWEEK's story might be little remembered if it weren't for the "killed by a terrorist" line, first hastily written as a funny aside in an internal reporting memo by San Francisco correspondent Pamela Abramson. "It's true--I am responsible for the single most irresponsible line in the history of journalism, all meant in jest," jokes Abramson, now a freelance writer who, all kidding aside, remains contrite about the furor it started. In New York, writer Eloise Salholz inserted the line into the story. Editors thought it was clear the comparison was hyperbole. "It was never intended to be taken literally," says Salholz. Most readers missed the joke. </blockquote>

While Newsweek admits they were wrong, one gets the impression that they regard their real mistake as the use of "terrorist" in their comparison.

Finally, some comments by Meghan Daum from her June 3, 2006, ''Los Angeles Times'' column.

<blockquote>Since at least the 1970s, we've surfed the waves of any number of media-generated declarations about what women want, what we don't want, what we're capable of and, inevitably, what it's like to figure out that we're not capable of all that stuff after all, which doesn't matter because it turns out we didn't want it anyway. <br><br>

Like hem lengths, scare tactics wrought by questionably massaged statistics change with the seasons. After the difficulty of marrying came the challenge of getting pregnant later in life. The panic du jour, of course, is the apparent near-impossibility of effectively raising kids while maintaining a career. Somehow this topic registers as sexier than what's happening in, say, Iraq or Darfur. In our more myopic moments, we seem to believe that people in refugee camps aren't nearly as stressed out as your average law school grad with a Baby Bjorn.</blockquote>

Well, we did not add anything to this story, but sometimes it seems best to let the players speak for themselves.

===Discussion questions===

(1) The article includes several graphics giving the results of studies on women and marriage. Here is one of these. Note that the first two studies were reported at about the same time.

<center>Three studies tried to gauge the odds of a<br>

40-year-old woman's eventually marrying.</center>

<center>Bennett, Bloom & Craig<br>

2.6% <br>

1986 Census report<br>

17%-23%<br>

1996 Census report<br>40.8%</center>

Do you think that "eventually marrying" is correct? See if you can find the first two studies and explain the difference between their outcomes.

(2) Do you think that the Newsweek editors were really surprised that their readers did not recognize their joke?

Submitted by Laurie Snell

==Independence of a DSMB is questioned==

[http://www.npr.org/templates/story/story.php?storyId=5462419 Conflicted Safety Panel Let Vioxx Study Continue], Snigdha Prakash, June 8, 2006, National Public Radio.

Vioxx is a pain reliever manufactured by Merck which has a [http://www.npr.org/templates/story/story.php?storyId=5470430 complex and controversial history.] There have been recent revelations about serious conflicts of interest in the Data Safety Monitoring Board (DSMB) for a large scale trial, the Vioxx Gastrointestinal Outcomes Research study (VIGOR). This is not the trial that resulted in Vioxx being removed from the market, but rather an earlier trial.

The DSMB reviewed data in 2000 that indicated a difference in the risk of cardiovascular events between Vioxx and the comparison drug, naproxen. If the VIGOR trial had been ended early because of an increased risk of heart problems, perhaps Vioxx would have been removed from the market four years earlier, saving countless lives and avoiding the flood of lawsuits that Merck is now facing.

The DSMB, however, did not stop the study early and offered several explanations. First, the DSMB

<blockquote>couldn't tell if Vioxx was causing the heart problems or if naproxen, acting like low-dose aspirin, protected people from them, making Vioxx just look risky by comparison.</blockquote>

This contention was disputed by several experts that NPR interviewed, who pointed out that the reason for the discrepancy was irrelevant to those patients in the VIGOR trial who suffered harm as a result of their participation in the study. Also, there was no solid evidence that naproxen had a protective effect.

The DSMB was also concerned about the small sample size. One of the experts disagreed with this contention as well. The results were indeed statistically significant, and were consistent across all subgroups.

<blockquote>Curt Furberg concedes the number of heart problems and deaths was small. But he says it's clear the results weren't due to chance. He says the patterns were the same in every population group in the study.</blockquote>

<blockquote>FURBERG: In old people, young people, those who have hypertension, those who don't, etc. And the findings were very, very consistent. So in my mind, this confirms that the findings are real.</blockquote>

The DSMB also did not stop the study early because the trial was almost completely over.

Again, Dr. Furberg objects to this logic.

<blockquote>Curt Furberg says it does take time to stop a large, multinational study, and only a few additional heart attacks or deaths could have been predicted to occur in the remaining time. But he says:</blockquote>

<blockquote>FURBERG: I think we have obligations -- ethical, moral obligations. You don't want to expose patients to a harmful drug in a drug study. They should not be treated like guinea pigs. They are human beings. And we need to respect their rights. </blockquote>

The DSMB also wanted the trial to continue because it was addressing a very important question.

<blockquote>Vioxx could save lives, if the study showed that Vioxx caused less gastrointestinal bleeding.</blockquote>

Another expert interviewed by NPR disagreed.

<blockquote>But cardiologist Paul Armstrong counters such bleeding isn't common.</blockquote>

<blockquote>ARMSTRONG: The frequency with which that occurs is minor, and I would say unlikely to be counterbalanced by this excess in death and cardiovascular events

</blockquote>

There were several conflicts of interest among members of the DSMB. The chair of the DSMB owned $73,000 in Merck stock. Shortly after the DSMB finished its work, the chair received a consulting contract for 12 days of work at $5,000 per day. Although it probably wasn't as lucrative, another member of the DSMB participated in the speakers bureau at Merck.

Another concern raised was the presence of a Merck statistician during all deliberations of the DSMB. It is not unusual for a company statistician to present data to the DSMB, but in most situations, the statistician then removes himself/herself from any additional discussion.

===Questions===

1. If there is a statistically significant difference in the risk of side effects between two arms of the study, should the DSMB stop the study? Does the reason for the discrepancy have any relevance?

2. Why would consistency across a wide range of subgroups in a study strengthen the credibility of a finding? How would you interpret such a finding if it were restricted to a specific subgroup? What action would be appropriate for that subgroup?

3. How large a financial stake should a person have before he/she should be barred from serving on a DSMB?

4. If you were serving on a DSMB, would you be troubled by the presence of a company statistician during all deliberations?

5. The members of a DSMB are typically selected by the company whose drug is being studied. Is there a problem with this approach? Can you suggest an alternative method for selecting members of a DSMB?

Submitted by Steve Simon

==Impact Factors==

[http://online.wsj.com/public/article/SB114946859930671119-eB_FW_Satwxeah21loJ7Dmcp4Rk_20070604.html?mod=rss_free Science Journals artfully try to boost their Rankings]<br>

''Wall Street Journal'', June 5, 2006, B1<br>

Sharon Begley

It always comes as a shock to students fresh out of high school chemistry and physics classes--where data is deemed sacred--to be told that in statistics it is legitimate to remove outliers. What is beyond the pale is to add data that didn't happen. This obvious restriction is now being loosened in a strange way. According to this ''Wall Street Journal'' article, researchers submitting papers to a particular scientific journal are being pushed to augment their articles with bibliographic citations of that specific journal. "Scientists and editors say scientific journals increasingly are manipulating rankings--called 'impact factors'--that are based on how often papers they publish are cited by other researchers."

Why? Because "Impact factors are essentially a grading system of how important the papers a journal publishes are." Besides inflating a journal's reputation, "Journals can [also] limit citations to papers published by competitors, keeping their rivals' impact factors down." As always, follow the money: "Impact factors matter to publishers' bottom lines because librarians rely on them to make purchasing decisions. Annual subscriptions to some journals can cost upwards of $10,000."
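To see why pressing authors for self-citations pays off, here is a minimal sketch. It assumes the usual two-year definition of the impact factor (citations received this year to items published in the previous two years, divided by the number of citable items from those years), which the article excerpt does not spell out. The journal and all of its counts are hypothetical.

```python
def impact_factor(citations: int, citable_items: int) -> float:
    """Two-year impact factor: citations this year to items from the
    previous two years, divided by the citable items from those years."""
    if citable_items <= 0:
        raise ValueError("citable_items must be positive")
    return citations / citable_items

# Hypothetical journal: 200 citable items over the two prior years,
# cited 300 times this year by other journals.
items = 200
outside_citations = 300
baseline = impact_factor(outside_citations, items)

# Assumed scenario: each of 100 newly accepted papers is asked to add
# two citations to the journal's own recent articles.
coerced = 100 * 2
inflated = impact_factor(outside_citations + coerced, items)

print(f"baseline impact factor:      {baseline:.2f}")
print(f"with coerced self-citations: {inflated:.2f}")
```

Because the denominator is fixed by what the journal has already published, every added citation raises the ratio directly, whatever its scientific merit.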

===Discussion===

1. In the ''Wall Street Journal'' article, several scientific journal editors deny that the impact factor plays any role in the selection of papers. Assume you are the editor. What would you tell would-be authors? What would you tell your reviewers?

2. The article further states, "Scientists and publishers worry that the cult of the impact factor is skewing the direction of scientific research." Elaborate.

3. A standard tool in frequentist inferential statistics is the "p-value", which deals with data this extreme or more extreme. How does this square with the sentence "What is beyond the pale is to add data that didn't happen"?
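For question 3 it may help to see how a p-value is assembled. The sketch below computes a one-sided binomial p-value for a hypothetical experiment of our own choosing (20 tosses of a fair coin, 15 heads observed). The tail sum includes the outcomes 16 through 20, none of which was actually observed.

```python
from math import comb

def binomial_upper_tail(n: int, k: int, p: float = 0.5) -> float:
    """P(X >= k) for X ~ Binomial(n, p): the one-sided p-value."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))

n, observed = 20, 15  # hypothetical data, not from the article

# Probability of exactly the observed outcome under a fair coin.
prob_observed = comb(n, observed) * 0.5**n

# The p-value also counts the more extreme outcomes that did not occur.
p_value = binomial_upper_tail(n, observed)

print(f"P(X = {observed})  = {prob_observed:.4f}")
print(f"P(X >= {observed}) = {p_value:.4f}")
```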

Submitted by Paul Alper

==Privacy vs. Security via Bayes Theorem==

We're giving up privacy and getting little in return<br>

''Minneapolis Star Tribune'', May 31, 2006<br>

Bruce Schneier

Bayes' theorem (Bayesian inversion) is customarily introduced either via the so-called Harvard Medical School fallacy or the so-called prosecutor's fallacy. The former illustrates that Prob(Disease|Test +)--what the patient wants to know--can be quite different from Prob(Test +|Disease)--the usual information given the patient by the doctor--when the number of false positives is large compared to the number of true positives. Likewise, the latter fallacy shows that Prob(Guilty|DNA matches) can be quite different from Prob(DNA matches|Guilty).
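A small sketch of the inversion may make the gap concrete. The numbers are illustrative assumptions, not taken from the article: a disease with prevalence 1 in 1000, a test that never misses a true case, and a 5 percent false positive rate.

```python
def posterior(prior: float, sensitivity: float, false_positive_rate: float) -> float:
    """P(Disease | Test +) computed with Bayes' theorem."""
    true_positives = prior * sensitivity
    false_positives = (1 - prior) * false_positive_rate
    return true_positives / (true_positives + false_positives)

# Assumed inputs: prevalence 1/1000, P(Test + | Disease) = 1.0,
# P(Test + | No disease) = 0.05.
p_disease_given_positive = posterior(
    prior=0.001, sensitivity=1.0, false_positive_rate=0.05
)

print("P(Test + | Disease) = 1.000")
print(f"P(Disease | Test +) = {p_disease_given_positive:.3f}")
```

With these inputs the false positives among the 999 healthy people per thousand swamp the single true positive, which is exactly the situation described above.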

However, we now live in an era where privacy and security have become the watchwords of the day, affording us an unexpected and possibly unpleasant application of Bayes' theorem. Bruce Schneier, a specialist in computer security, argues that data mining by means of NSA wiretapping of phone calls and emails to uncover terrorist plots is essentially fruitless because of the incredibly large number of false positives in comparison to the tiny number of true positives [Minneapolis Star Tribune, May 31, 2006]. Or, as he puts it, even an "unrealistically accurate system" will be such that "the police will have to investigate 27 million potential plots in order to find the one real terrorist plot per month. Clearly ridiculous." He concludes that "By allowing the NSA to eavesdrop on us all, we're not trading privacy for security. We're giving up privacy without getting any security in return."
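The order of magnitude of the quoted figure can be reproduced with back-of-the-envelope arithmetic. The inputs below are our own assumptions, chosen to be consistent with the quote rather than taken from the text above: a trillion screened events per year, twelve real plots per year, a 1 percent false positive rate, and a system that never misses a real plot.

```python
# All four inputs are assumptions made for illustration.
EVENTS_PER_YEAR = 10**12      # calls, emails, etc. screened per year
REAL_PLOTS_PER_YEAR = 12      # "one real terrorist plot per month"
FALSE_POSITIVE_RATE = 0.01    # innocent events wrongly flagged
DETECTION_RATE = 1.0          # real plots correctly flagged

innocent_events = EVENTS_PER_YEAR - REAL_PLOTS_PER_YEAR
false_alarms_per_year = innocent_events * FALSE_POSITIVE_RATE
false_alarms_per_day = false_alarms_per_year / 365

true_alarms_per_year = REAL_PLOTS_PER_YEAR * DETECTION_RATE
# Bayes' theorem: probability that a flagged event is a real plot.
p_real_given_alarm = true_alarms_per_year / (
    true_alarms_per_year + false_alarms_per_year
)

print(f"false alarms per day: {false_alarms_per_day:,.0f}")
print(f"P(real plot | alarm): {p_real_given_alarm:.2e}")
```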

===Discussion===

1. Schneier maintains that "Data mining works best when you're searching for a well-defined profile, a reasonable number of attacks per year, and a low cost of false alarms. Credit-card fraud is one of data mining's success stories: All credit-card companies mine their transaction databases for data for spending patterns that indicate a stolen card. Many credit-card thieves share a pattern." What pattern do credit-card thieves tend to have? What pattern, if any, is there for terrorists? Why would you react differently to a phone call from your credit-card company checking on one of your transactions as opposed to a government official questioning the web sites you visit?

2. He uses the term "base rate fallacy" to describe the imbalance between false positives and true positives. Why is this term indicative of the problem?

3. In the context of uncovering terrorist plots, what is meant by false negatives and true negatives?

4. He claims, "It's a needle-in-a-haystack problem, and throwing more hay on the pile doesn't make that problem any easier." What do you think he means by this image?

Submitted by Paul Alper

==The interaction that wasn't there== | |||

[http://content.nejm.org/cgi/reprint/NEJMp068137v1.pdf Time-to-Event Analyses for Long-Term Treatments -- The APPROVe Trial.]Stephen W. Lagakos. The New England Journal of Medicine. 2006 June 26; [Epub ahead of print] | |||

Vioxx (rofecoxib), a pain relief medication in a class of drugs known as Cox-2 inhibitors, is the story that just won't go away. On June 26, 2006, the ''New England Journal of Medicine'' (NEJM) released a publication by Stephen Lagakos re-analyzing data from a pivotal trial, the Adenomatous Polyp Prevention on Vioxx (APPROVe) trial. At the same time, the Journal published two letters critical of the original publication of the APPROVe trial (Bresalier RS, Sandler RS, Quan H, et al. Cardiovascular events associated with rofecoxib in a colorectal adenoma chemoprevention trial. NEJM 2005; 352: 1092-102, not available online.), a response from the first two authors of the original study, and a correction to the original publication. All the articles are interesting, but especially the one by Dr. Lagakos, a professor of biostatistics at the Harvard School of Public Health who was hired by NEJM to produce an independent review of the APPROVe study. He comments on a particular side effect in the trial (cardiovascular events), which was of enough concern to force Merck to take Vioxx off the market temporarily. | |||

<blockquote>Assessment of the cardiovascular data raises important issues about the analysis and interpretation of a time-to-event end point in a randomized, placebo controlled trial evaluating a long term treatment. These issues include the appropriate period of follow-up for safety outcomes after the discontinuation of treatment; the purpose and implications of checking the assumption of proportional hazards, which underlies the commonly used logrank test and Cox model; and what the results of a trial examining long-term use imply about the safety of a drug if it were given for shorter periods.</blockquote>

The APPROVe trial originally analyzed events during the course of treatment (up to 36 months) and any events that occurred within 14 days of discontinuation of the drug or placebo. The 14 day window after cessation of treatment is critical. If the window is too narrow, you might miss some events that were related to the treatment. On the other hand, if your window is too wide, you might include events unrelated to the treatment. These events unrelated to the treatment would presumably occur in equal numbers in both groups, diluting any effect that you might otherwise see.
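The dilution effect of a wide window can be illustrated with a little arithmetic. The following is only a sketch: the event counts are hypothetical, the two arms are assumed to be the same size, and the function name <code>risk_ratio</code> is introduced here purely for illustration. None of these figures come from the APPROVe trial.

```python
def risk_ratio(treated_events, control_events, unrelated_events_per_arm):
    """Ratio of event counts in two equally sized arms after the same number
    of treatment-unrelated events has been added to each arm."""
    treated_total = treated_events + unrelated_events_per_arm
    control_total = control_events + unrelated_events_per_arm
    return treated_total / control_total


# Hypothetical counts: 40 treatment-period events on drug, 20 on placebo.
# A wider follow-up window adds unrelated events equally to both arms.
for extra in (0, 10, 50):
    print(extra, round(risk_ratio(40, 20, extra), 2))
```

As the number of unrelated events grows, the ratio moves toward 1, which is the sense in which a window that is too wide can hide a real difference.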

A short window is especially problematic if patients discontinue the drug for reasons related to the drug itself (the drug might be difficult to tolerate, for example). This causes a differential dropout rate and can produce some serious biases. Dr. Lagakos notes that the bias could end up going in either direction. There is indeed evidence of a differential dropout rate, and Dr. Lagakos suggests some alternate analyses that should be considered in the face of this problem.

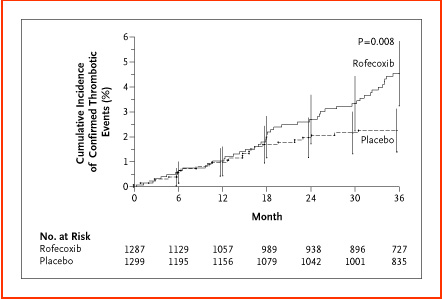

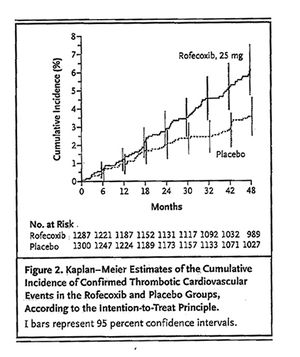

Dr. Lagakos then discusses the proportional hazards assumption. This assumption is pivotal to the proper interpretation of the hazard ratio in a Cox proportional hazards model. According to Dr. Lagakos, two deviations from proportional hazards are especially troublesome: two survival curves that are initially more or less identical but then diverge sharply at a certain time point, and two survival curves that are initially different but converge after a particular time point. The original analysis noted the former pattern, with the two Kaplan-Meier survival curves more or less coincident for the first 18 months and then separating sharply.

When you suspect a violation of proportional hazards, one approach is to model the data using time varying covariates. In particular, you can model an interaction between time and treatment or an interaction between log time and treatment.

This is where things went seriously wrong.
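One common way to write such an interaction model is sketched here. The notation is our own, not taken from the APPROVe report: <math>Z</math> is the treatment indicator, <math>h_0(t)</math> the baseline hazard, and <math>g(t)</math> stands for either untransformed time or log time.

```latex
% Cox model with a treatment-by-time interaction (a sketch; notation assumed)
h(t \mid Z) = h_0(t)\,\exp\bigl(\beta Z + \gamma\, Z\, g(t)\bigr),
\qquad g(t) = t \quad\text{or}\quad g(t) = \log t

% Hazard ratio, treated (Z = 1) versus control (Z = 0), at time t
\mathrm{HR}(t) = \exp\bigl(\beta + \gamma\, g(t)\bigr)

% Proportional hazards corresponds to \gamma = 0, so the interaction test is a test of
H_0 : \gamma = 0
```

Under this formulation the hazard ratio is constant over time only when <math>\gamma = 0</math>, and the choice between <math>t</math> and <math>\log t</math> for <math>g(t)</math> gives two different tests that need not agree.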

<blockquote>The APPROVe investigators planned to use an interaction test with the logarithm of time as the primary basis for testing the proportional-hazards assumption. This test resulted in a P value of 0.07, which did not quite meet the criterion of 0.05 specified for rejecting the assumption. However, the original report of the APPROVe trial mistakenly gave the P value as 0.01, which was actually the result of an interaction test involving untransformed time. (This error is corrected in this issue of the Journal.)</blockquote>

Dr. Lagakos notes that even if the test for interaction had not been in error, there would still be problems. The presence of an interaction could imply several possible deviations from the proportional hazards assumption, and not necessarily a deviation that represents similar risk for the first 18 months and dissimilar risk thereafter. He also points out that a graphical inspection of the Kaplan-Meier curves for violations of proportional hazards is potentially misleading.

Finally, Dr. Lagakos reminds us that identical survival curves during the first 12-18 months do not, in and of themselves, imply that a short term course of rofecoxib is without risk. Many exposures, such as radiation, have a latency period, and a divergence of risk at a later time point could occur even with a brief exposure that shows no change in risk during the short term.

===Questions===

1. Why does the drug company (Merck) have a financial incentive to demonstrate that exposure to rofecoxib carries no increased risk in the short term, only in the long term?

2. This is not the only study on rofecoxib that required a clarification or retraction (see the above article, Independence of a DSMB is questioned) nor the only study of Cox-2 inhibitors that has been criticized. Are these retractions evidence that problems with incorrect data analyses are self correcting, or are they evidence that the peer-review process is broken?

Submitted by Steve Simon

===Figures===

The following two figures were added by Laurie Snell. The first figure is from the authors' original paper and the second from their recent correspondence in the NEJM. In the original article the authors stated that the risk for Thrombotic Events was not apparent until after 18 months. After correcting the errors in this paper and adding additional data, they conclude that the risk is now apparent after 3 months.

<center>[[Image:vioxx1.jpg]]</center>

Figure 2: Kaplan–Meier Estimates of the Cumulative Incidence of Confirmed Serious Thrombotic Events.

[[Image:vioxx2.jpg|center|300px|]]

Latest revision as of 19:29, 3 March 2007

Quotation

Single 40-year-old women have a better chance of being killed by a terrorist than getting married.

Newsweek, June 2, 1986

See: Newsweek says they were wrong

Forsooths

These Forsooths are from the June 2006 RSS News.

This summer there's about a 50 per cent probability that there will be above normal temperatures for much of Britain and Europe.

The Times

5 March 2004

To convert kilometres to miles multiply by .6214; kilometres/hour to miles/hour multiply by .6117

Schott's Almanac, page 193, Table of Conversions.

The BBC remains just ahead of commercial radio in the UK, with a 67% share of all listeners compared with 64%.

BBC news website

2 February 2006

Statz Rappers

A statistics class at the University of Oregon had an imaginative graduate teaching assistant.

How to Lie with Statistics Turns Fifty

"How to Lie with Statistics Turns Fifty"

Special Section: Statistical Science, Vol. 20, No. 3, August 2005

The College Mathematics Journal (CMJ) has a column called "Media Highlights" that covers mathematics generally, and its reviews often involve probability or statistical concepts. In the May 2006 issue of CMJ, Norton Starr reviews this special section of Statistical Science, which recognizes the 50th birthday of Darrell Huff's famous book "How to Lie with Statistics" by asking several authors to contribute articles for this birthday party. These articles are:

"Darrell Huff and Fifty Years of How to Lie with Statistics", Michael Steele.

"Lies, Calculations and Constructions: Beyond How to Lie with Statistics", Joel Best.

"Lying with Maps", Mark Monmonier.

"How to Confuse with Statistics or: The Use and Misuse of Conditional Probabilities", Walter Krämer and Gerd Gigerenzer.

"How to Lie with Bad Data", Richard D. De Veaux and David J. Hand.

"How to Accuse the Other Guy of Lying with Statistics", Charles Murray.

"Ephedra", Sally C. Morton.

"In Search of the Magic Lasso: The Truth About the Polygraph", Stephen E. Fienberg and Paul C. Stern.

Norton gives a nice description of each of the papers including some of his own insightful comments. We will restrict ourselves to some quotes from the articles that we found particularly interesting.

Michael Steele tells us the story of the life of Darrell Huff and begins with:

In 1954 former Better Homes and Gardens editor and active freelance writer Darrell Huff published a slim (142 page) volume, which over time would become the most widely read statistics book in the history of the world.

There is some irony to the world's most famous statistics book having been written by a person with no formal training in statistics, but there is also some logic to how this came to be. Huff had a thorough training for excellence in communication, and he had an exceptional commitment to doing things for himself.

In his article Joel Best reminds us of the failure of the "critical thinking" movement in the late 1980s and the 1990s and asks "who would teach it". He is not very optimistic about this being done in statistics courses or in social science courses. And we were not very successful in getting people to teach our Chance course. He concludes his article with:

We all know statistical literacy is an important problem, but we’re not going to be able to agree on its place in the curriculum. Which means that "How to Lie with Statistics" is going to continue to be needed in the years ahead.

When we read the "The Bell Curve" by Richard Herrnstein and Charles Murray to review for Chance News, it seemed to us that the reviewers in the major newspapers could not have actually read the book. So we wrote a long review of the book for Chance News (Chance News 3.15, 3.16, 4.01).

In his article Charles Murray explains six ways to knock down a book. He describes these as:

Tough but effective strategies for making people think that the target book is an irredeemable mess, the findings are meaningless, the author is incompetent and devious and the book’s thesis is something it isn’t.

Our experience with "The Bell Curve" made us realize that we may have seen an example of his sixth way to knock down a book which he calls "THE BIG LIE" and describes as follows:

Finally, let us turn from strategies based on half-truths and misdirection to a more ambitious approach: to borrow from Goebbels, the Big Lie. The necessary and sufficient condition for a successful Big Lie is that the target book has at some point discussed a politically sensitive issue involving gender, race, class or the environment, and has treated this issue as a scientifically legitimate subject of investigation (note that the discussion need not be a long one, nor is it required that the target book takes a strong position, nor need the topic be relevant to the book's main argument). Once this condition is met, you can restate the book's position on this topic in a way that most people will find repugnant (e.g., women are inferior to men, blacks are inferior to whites, we don't need to worry about the environment), and then claim that this repugnant position is what the book is about.

What makes the Big Lie so powerful is the multiplier effect you can get from the media. A television news show or a syndicated columnist is unlikely to repeat a technical criticism of the book, but a nicely framed Big Lie can be newsworthy. And remember: It's not just the public who won't read the target book. Hardly anybody in the media will read it either. If you can get your accusation into one important outlet, you can start a chain reaction. Others will repeat your accusation, soon it will become the conventional wisdom, and no one will remember who started it. Done right, the Big Lie can forever after define the target book in the public mind.

Finally we agree with Norton's final remark in his review:

The articles are both a compliment to and a complement of Huff's pathbreaking venture in writing. This issue of Statistical Science is destined to be a collector's item.

Submitted by Laurie Snell

What does "unable to replicate" mean?

"Freakonomics" Author and HarperCollins Sued for Defamation, Kevin Orland, April 11, 2006, Bloomberg.com.

John Lott is an economist who has published a book "More Guns, Less Crime" that uses a multiple linear regression model to demonstrate that crime rates go down when states pass "concealed carry" laws. Concealed carry laws allow citizens to apply for the right to legally carry a concealed gun for their own protection. The regression model controlled for a large number of possible confounding variables. The theory is that if criminals do not know which of their victims might be armed, they would be more reluctant to mug strangers. This theory is very controversial and has come under attack from gun control advocates.

Steven D. Levitt, an economist, and Stephen J. Dubner, a journalist, published a book "Freakonomics" that uses a multiple linear regression model in Chapter 4 to demonstrate that states which have a high abortion rate saw a larger drop in crime than states with a low abortion rate. The regression model controlled for a large number of possible confounding variables. The theory is that if abortion laws reduced the number of "unwanted children", fewer children would grow up in an environment of neglect and end up becoming criminals. This theory is very controversial and has come under attack from right-to-life groups.

It is not too surprising that the authors of two such provocative regression models would end up in a public clash. Levitt and Dubner criticize Lott's research in their book, and Lott has responded by suing.

Lott said in a federal lawsuit filed yesterday in Chicago that Levitt, a University of Chicago economist, defamed him when he wrote that other scholars have been unable to replicate Lott's research linking lower crime rates with the right to carry guns. The passage amounts to an allegation that Lott falsified his results, according to the suit.

There are actually much stronger allegations about fraud concerning Lott's research. Timothy Noah, for example, published an article in Slate magazine about Lott with the title "Another firearms scholar whose dog ate his data."

But apparently, the allegation of failure to replicate is more serious.

The allegation "damages Lott's reputation in the eyes of the academic community in which he works, and in the minds of the hundreds of thousands of academics, college students, graduate students, and members of the general public who read 'Freakonomics,'" Lott said in the lawsuit.

The remedies suggested by Lott are rather harsh.

Lott's suit asks for a halt in sales, a retraction in the next printing of the book and unspecified damages from Levitt and HarperCollins.

Interestingly enough the suit does not mention the co-author, Stephen Dubner.

Questions

1. What does the phrase "unable to replicate" mean to you? Does replication mean different things in economics versus medicine? Is "unable to replicate" a code phrase used to hint that the data is fraudulent?

2. Why do you think that Lott sued Levitt and not Noah?

3. What impact might this lawsuit have on scientific criticism?

Submitted by Steve Simon

Newsweek says they were wrong

Marriage by the Numbers

Newsweek, June 6, 2006, society; Pg. 40

Daniel McGinn; With Andrew Murr, Karen Springen, Joan Raymond, Marc Bain, Alice-Azania Jarvis and Sam Register

Too Late for Prince Charming

Newsweek, June 2, 1986, Lifestyle, Pg. 58

Eloise Salholz, Rennee Michael, Mark Starr, Shawn Doherty, Pamela Abramson, Pat Wingert.

Lies, damn lies and marriage statistics

Los Angeles Times, June 3, 2006 Editorial Pages Desk; Part B; Pg. 17

Meghan Daum.

The 1986 Newsweek article begins with:

HIGHLIGHT:

A new study reports that college-educated women who are still single at the age of 35 have only a 5 percent chance of ever getting married

BODY:

Her sister had heard about it from a friend who had heard about it on "Phil Donahue" that morning. Her mother got the bad news via a radio talk show later that afternoon. So by the time Harvard graduate Carol Owens, 23, sat down to a family dinner in Boston, the discussion of the man shortage had reached a feverish pitch. With six unmarried daughters, Carol's said her mother was sounding an alarm. "You've got to get out of the house and meet someone," she insisted. "Now."

After two more such examples the article goes on to say:

The traumatic news came buried in an arid demographic study titled, innocently enough, "Marriage Patterns in the United States." But the dire statistics confirmed what everybody suspected all along: that many women who seem to have it all -- good looks and good jobs, advanced degrees and high salaries -- will never have mates. According to the report, white, college-educated women born in the mid-'50s who are still single at 30 have only a 20 percent chance of marrying. By the age of 35 the odds drop to 5 percent. Forty-year-olds are more likely to be killed by a terrorist: they have a minuscule 2.6 percent probability of tying the knot.

Although the study reported only on white, college-educated women, it was clearly the sentence "Forty-year-olds are more likely to be killed by a terrorist" that gave the article such a big impact on the public. We read further:

Within days, that study, as it came to be known, set off a profound crisis of confidence among America's growing ranks of single women. For years bright young women single-mindedly pursued their careers, assuming that when it was time for a husband they could pencil one in. They were wrong. "Everybody was talking about it and everybody was hysterical," says Bonnie Maslin, a New York therapist. "One patient told me 'I feel like my mother's finger is wagging at me, telling me I shouldn't have waited'." Those who weren't sad got mad. The study infuriated the contentedly single, who thought they were being told their lives were worthless without a man. "I'm not a little spinster who sits home Friday night and cries," says Boston contractor Lauren Aronson, 29. "I'm not married, but I still have a meaningful life with meaningful relationships."

On the cover of the 2006 article we see:

20 Years Ago

Newsweek Predicted a Single 40-Year-Old Woman Had a Better Chance of Being Killed by a Terrorist Than Getting Married. Why We Were Wrong.

From the 2006 Newsweek article we read:

To mark the anniversary of the "Marriage Crunch" cover, NEWSWEEK located 11 of the 14 single women in the story. Among them, eight are married and three remain single. Several have children or stepchildren. None divorced. Twenty years ago Andrea Quattrocchi was a career-focused Boston hotel executive and reluctant to settle for a spouse who didn't share her fondness for sailing and sushi. Six years later she met her husband at a beachfront bar; they married when she was 36. Today she's a stay-at-home mom with three kids--and yes, the couple regularly enjoys sushi and sailing. "You can have it all today if you wait--that's what I'd tell my daughter," she says. " 'Enjoy your life when you're single, then find someone in your 30s like Mommy did'."

The writers for Newsweek go on to say:

The research that led to the highly touted marriage predictions began at Harvard and Yale in the mid-1980s. Three researchers--Neil Bennett, David Bloom and Patricia Craig--began exploring why so many women weren't marrying in their 20s, as most Americans traditionally had. Would these women still marry someday, or not at all? To find an answer, they used "life table" techniques, applying data from past age cohorts to predict future behavior--the same method typically used to predict mortality rates. "It's the staple [tool] of demography," says Johns Hopkins sociologist Andrew Cherlin. "They were looking at 40-year-olds and making predictions for 20-year-olds." The researchers focused on women, not men, largely because government statisticians had collected better age-of-marriage data for females as part of its studies on fertility patterns and birthrates.
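The basic arithmetic behind a life-table projection can be sketched in a few lines. This is only an illustration of the general idea, not the researchers' actual model: the annual first-marriage probabilities below are hypothetical, and the function name <code>prob_ever_marrying</code> is introduced here for illustration.

```python
def prob_ever_marrying(annual_rates):
    """Chance of marrying at some point, given the yearly probabilities of
    marrying among women who are still single at the start of each year."""
    still_single = 1.0
    for q in annual_rates:
        still_single *= (1.0 - q)  # survive another year unmarried
    return 1.0 - still_single


# Hypothetical yearly rates for a woman single at 40, borrowed from an
# older cohort and assumed to decline steadily with age.
rates_from_40 = [0.010, 0.008, 0.006, 0.004, 0.002]
print(round(prob_ever_marrying(rates_from_40), 3))
```

The sketch makes the key assumption visible: the answer depends entirely on whether rates observed in earlier cohorts still apply to women who are postponing marriage now.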

Enter NEWSWEEK. We were hardly the first to make a big deal out of their findings, which began getting heavy media attention after the Associated Press wrote about the study that February. People magazine put the study on its cover in March with the headline the new look in old maids. And NEWSWEEK's story might be little remembered if it weren't for the "killed by a terrorist" line, first hastily written as a funny aside in an internal reporting memo by San Francisco correspondent Pamela Abramson. "It's true--I am responsible for the single most irresponsible line in the history of journalism, all meant in jest," jokes Abramson, now a freelance writer who, all kidding aside, remains contrite about the furor it started. In New York, writer Eloise Salholz inserted the line into the story. Editors thought it was clear the comparison was hyperbole. "It was never intended to be taken literally," says Salholz. Most readers missed the joke.

While Newsweek admits they were wrong, one gets the impression that their real mistake was the use of terrorist in their comparison.

Finally, some comments by Meghan Daum from her June 3, 2006, Los Angeles Times column.

Since at least the 1970s, we've surfed the waves of any number of media-generated declarations about what women want, what we don't want, what we're capable of and, inevitably, what it's like to figure out that we're not capable of all that stuff after all, which doesn't matter because it turns out we didn't want it anyway.

Like hem lengths, scare tactics wrought by questionably massaged statistics change with the seasons. After the difficulty of marrying came the challenge of getting pregnant later in life. The panic du jour, of course, is the apparent near-impossibility of effectively raising kids while maintaining a career. Somehow this topic registers as sexier than what's happening in, say, Iraq or Darfur. In our more myopic moments, we seem to believe that people in refugee camps aren't nearly as stressed out as your average law school grad with a Baby Bjorn.

Well, we did not add anything to this story but sometimes it seems best to let the players speak for themselves.

Discussion questions

(1) The article includes several graphics giving the results of studies on women and marriage. Here is one of these. Note that the first two studies were reported at about the same time.

Three studies tried to gauge the odds of a 40-year-old woman's eventually marrying.

Bennett, Bloom & Craig: 2.6%

1986 Census report: 17%-23%

1996 Census report: 40.8%

Do you think that "eventually marrying" is correct? See if you can find the first two studies and see if you can explain the difference in the first two outcomes.

(2) Do you think that the Newsweek editors were really surprised that their readers did not recognize their joke?

Submitted by Laurie Snell

Independence of a DSMB is questioned

Conflicted Safety Panel Let Vioxx Study Continue, Snigdha Prakash, June 8, 2006, National Public Radio.

Vioxx is a pain reliever manufactured by Merck which has a complex and controversial history. There have been recent revelations about serious conflicts of interest in the Data Safety Monitoring Board (DSMB) for a large scale trial, the Vioxx Gastrointestinal Outcomes Research study (VIGOR). This is not the trial that resulted in Vioxx being removed from the market, but rather an earlier trial.

The DSMB reviewed data in 2000 that indicated a difference in the risk of cardiovascular events between Vioxx and the comparison drug, naproxen. If the VIGOR trial had been ended early because of an increased risk of heart problems, perhaps Vioxx would have been removed from the market four years earlier, saving countless lives and avoiding the flood of lawsuits that Merck is now facing.

The DSMB, however, did not stop the study early and offered several explanations. First, the DSMB

couldn't tell if Vioxx was causing the heart problems or if naproxen, acting like low-dose aspirin, protected people from them, making Vioxx just look risky by comparison.

This contention was disputed by several experts that NPR interviewed who pointed out that the reason for the discrepancy was irrelevant to those patients in the VIGOR trial that suffered harm as a result of their participation in the study. Also, there was no solid evidence that naproxen had a protective effect.

The DSMB was also concerned about the small sample size. One of the experts disagreed with this contention also. The results were indeed statistically significant, and were consistent across all subgroups.

Curt Furberg concedes the number of heart problems and deaths was small. But he says it's clear the results weren't due to chance. He says the patterns were the same in every population group in the study.

FURBERG: In old people, young people, those who have hypertension, those who don't, etc. And the findings were very, very consistent. So in my mind, this confirms that the findings are real.

The DSMB also did not stop the study early because the trial was almost completely over.

Again, Dr. Furberg objects to this logic.

Curt Furberg says it does take time to stop a large, multinational study, and only a few additional heart attacks or deaths could have been predicted to occur in the remaining time. But he says:

FURBERG: I think we have obligations -- ethical, moral obligations. You don't want to expose patients to a harmful drug in a drug study. They should not be treated like guinea pigs. They are human beings. And we need to respect their rights.

The DSMB also wanted the trial to continue because it was addressing a very important question.

Vioxx could save lives, if the study showed that Vioxx caused less gastrointestinal bleeding.

Another expert interviewed by NPR disagreed.

But cardiologist Paul Armstrong counters such bleeding isn't common.

ARMSTRONG: The frequency with which that occurs is minor, and I would say unlikely to be counterbalanced by this excess in death and cardiovascular events.

There were several conflicts of interest among members of the DSMB. The chair of the DSMB owned $73,000 in Merck stock. Shortly after the DSMB finished its work, the chair received a consulting contract for 12 days of work at $5,000 per day. Although it probably wasn't as lucrative, another member of the DSMB participated in the speaker bureau at Merck.

Another concern raised was the presence of a Merck statistician during all deliberations of the DSMB. It is not unusual for a company statistician to present data to the DSMB, but in most situations, the statistician then removes himself/herself from any additional discussion.

Questions

1. If there is a statistically significant difference in the risk of side effects between two arms of the study, should the DSMB stop the study? Does the reason for the discrepancy have any relevance?

2. Why would consistency across a wide range of subgroups in a study strengthen the credibility of a finding? How would you interpret such a finding if it were restricted to a specific subgroup? What action would be appropriate for that subgroup?

3. How large a financial stake should a person have before he/she should be barred from serving on a DSMB?

4. If you were serving on a DSMB, would you be troubled by the presence of a company statistician during all deliberations?

5. The members of a DSMB are typically selected by the company whose drug is being studied. Is there a problem with this approach? Can you suggest an alternative method for selecting members of a DSMB?

Submitted by Steve Simon

Impact Factors

Science Journals artfully try to boost their Rankings

Wall Street Journal, June 5, 2006, B1

Sharon Begley

It always comes as a shock to students fresh out of high school chemistry and physics classes--where data is deemed sacred--to be told that in statistics it is legitimate to remove outliers. What is beyond the pale is to add data that didn't happen. This obvious restriction is now being loosened in a strange way. According to this Wall Street Journal article, researchers submitting papers to a particular scientific journal are being pushed to augment their articles with bibliographic citations of that specific journal. "Scientists and editors say scientific journals increasingly are manipulating rankings--called 'impact factors'--that are based on how often papers they publish are cited by other researchers."

Why? Because "Impact factors are essentially a grading system of how important the papers a journal publishes are." Besides inflating a journal's reputation, "Journals can [also] limit citations to papers published by competitors, keeping their rivals' impact factors down." As always, follow the money: "Impact factors matter to publishers' bottom lines because librarians rely on them to make purchasing decisions. Annual subscriptions to some journals can cost upwards of $10,000."

Discussion

1. In the Wall Street Journal article, several scientific journal editors deny that the impact factor plays any role in the selection of papers. Assume you are the editor, what would you tell would-be authors? What would you tell your reviewers?

2. The article further states, "Scientists and publishers worry that the cult of the impact factor is skewing the direction of scientific research." Elaborate.

3. A standard technique in frequentist inferential statistics is known as "p-value" which deals with data this extreme or more extreme. How does this square with the sentence "What is beyond the pale is to add data that didn't happen"?

Submitted by Paul Alper

Privacy vs. Security via Bayes Theorem

We're giving up privacy and getting little in return

Minneapolis Star Tribune, May 31, 2006

Bruce Schneier

Bayes theorem (Bayesian inversion) is customarily introduced either via the so-called Harvard Medical School fallacy or the so-called prosecutor's fallacy. The former illustrates that the Prob(Disease|Test +)--what the patient wants to know--can be quite different from Prob(Test +|Disease)--the usual information given to the patient by the doctor--when the number of false positives is large compared to the number of true positives. Likewise, the latter fallacy shows that Prob(Guilty|DNA matches) can be quite different from Prob(DNA matches|Guilty).

However, we now live in an era where privacy and security become the watchwords of the day, affording us an unexpected and possibly unpleasant application of Bayes theorem. Bruce Schneier, a specialist in computer security, considers how data mining by means of NSA government wiretapping of phone calls/emails to uncover terrorist plots, is essentially fruitless because of the incredibly large number of false positives in comparison to the tiny number of true positives [Minneapolis Star Tribune, May 31, 2006]. Or, as he puts it, even an "unrealistically accurate system" will be such that "the police will have to investigate 27 million potential plots in order to find the one real terrorist plot per month. Clearly ridiculous." He concludes that "By allowing the NSA to eavesdrop on us all, we're not trading privacy for security. We're giving up privacy without getting any security in return."
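The base-rate effect behind this argument can be sketched with a short Bayes calculation. The numbers below are hypothetical and are not Schneier's; the function names <code>posterior</code> and <code>false_alarms_per_true_hit</code> are likewise introduced here only for illustration.

```python
def posterior(prior, sensitivity, false_positive_rate):
    """Prob(plot | alarm) by Bayes theorem."""
    true_pos = prior * sensitivity
    false_pos = (1.0 - prior) * false_positive_rate
    return true_pos / (true_pos + false_pos)


def false_alarms_per_true_hit(prior, sensitivity, false_positive_rate):
    """Expected number of false alarms raised for every real plot detected."""
    return ((1.0 - prior) * false_positive_rate) / (prior * sensitivity)


# Hypothetical inputs: one monitored event in a billion is part of a plot,
# the system catches 99% of real plots and flags 0.1% of innocent events.
prior, sens, fpr = 1e-9, 0.99, 0.001
print(posterior(prior, sens, fpr))
print(false_alarms_per_true_hit(prior, sens, fpr))
```

Even with error rates this small, almost every alarm is a false one because the prior is so tiny, which is the imbalance between false positives and true positives that the article describes.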

Discussion

1. Schneier maintains that "Data mining works best when you're searching for a well-defined profile, a reasonable number of attacks per year, and a low cost of false alarms. Credit-card fraud is one of data mining's success stories: All credit-card companies mine their transaction databases for data for spending patterns that indicate a stolen card. Many credit-card thieves share a pattern." What pattern do credit-card thieves tend to have? What pattern, if any, is there for terrorists? Why would you react differently to a phone call from your credit-card company checking on one of your transactions as opposed to a government official questioning the web sites you visit?

2. He uses the term "base rate fallacy" to describe the imbalance between false positives and true positives. Why is this term indicative of the problem?

3. In the context of uncovering terrorist plots, what is meant by false negatives and true negatives?

4. He claims, "It's a needle-in-a-haystack problem, and throwing more hay on the pile doesn't make that problem any easier." What do you think he means by this image?

Submitted by Paul AlperThe interaction that wasn't there

Time-to-Event Analyses for Long-Term Treatments -- The APPROVe Trial.Stephen W. Lagakos. The New England Journal of Medicine. 2006 June 26; [Epub ahead of print]

Vioxx (rofecoxib), a pain relief medication in a class of drugs known as Cox-2 inhibitors, is the story that just won't go away. On June 26, 2006, the New England Journal of Medicine (NEJM) released an article by Stephen Lagakos re-analyzing data from a pivotal trial, the Adenomatous Polyp Prevention on Vioxx (APPROVe) trial. At the same time, the Journal published two letters critical of the original publication of the APPROVe trial (Bresalier RS, Sandler RS, Quan H, et al. Cardiovascular events associated with rofecoxib in a colorectal adenoma chemoprevention trial. NEJM 2005; 352: 1092-102, not available online), a response from the first two authors of the original study, and a correction to the original publication. All the articles are interesting, especially the one by Dr. Lagakos, a professor of biostatistics at the Harvard School of Public Health who was hired by NEJM to produce an independent review of the APPROVe study. He comments on a particular side effect in the trial (cardiovascular events), which was of enough concern to force Merck to take Vioxx off the market temporarily.

Assessment of the cardiovascular data raises important issues about the analysis and interpretation of a time-to-event end point in a randomized, placebo controlled trial evaluating a long term treatment. These issues include the appropriate period of follow-up for safety outcomes after the discontinuation of treatment; the purpose and implications of checking the assumption of proportional hazards, which underlies the commonly used logrank test and Cox model; and what the results of a trial examining long-term use imply about the safety of a drug if it were given for shorter periods.

The APPROVe trial originally analyzed events during the course of treatment (up to 36 months) and any events that occurred within 14 days of discontinuation of the drug or placebo. The 14 day window after cessation of treatment is critical. If the window is too narrow, you might miss some events that were related to the treatment. On the other hand, if your window is too wide, you might include events unrelated to the treatment. These events unrelated to the treatment would presumably occur in equal numbers in both groups, diluting any effect that you might otherwise see.
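The dilution effect of a wide window can be illustrated with a small calculation. This is only a sketch: the event counts and group sizes below are hypothetical and are not taken from the APPROVe trial, and the helper name `relative_risk` is introduced here for illustration.

```python
def relative_risk(events_treated, n_treated, events_control, n_control):
    """Ratio of the event proportion in the treated group to that in the control group."""
    return (events_treated / n_treated) / (events_control / n_control)


# Hypothetical counts, assumed purely for illustration.
n_per_group = 1000
treated_events_narrow = 30   # events captured with a narrow follow-up window
control_events_narrow = 15
unrelated_events = 20        # extra events picked up in EACH group by a wider window

narrow = relative_risk(treated_events_narrow, n_per_group,
                       control_events_narrow, n_per_group)
wide = relative_risk(treated_events_narrow + unrelated_events, n_per_group,
                     control_events_narrow + unrelated_events, n_per_group)

print(f"Relative risk, narrow window: {narrow:.2f}")
print(f"Relative risk, wide window:   {wide:.2f}")
```

Adding the same number of unrelated events to both groups pulls the ratio toward 1, which is the dilution described above.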

A short window is especially problematic if patients discontinue the drug for reasons related to the drug itself (the drug might be difficult to tolerate, for example). This causes a differential dropout rate and can produce some serious biases. Dr. Lagakos notes that the bias could end up going in either direction. There is indeed evidence of a differential drop-out rate, and Dr. Lagakos suggests some alternate analyses that should be considered in the face of this problem.

Dr. Lagakos then discusses the proportional hazards assumption. This assumption is pivotal in the proper interpretation of the hazard ratio in a Cox proportional hazards model. Two examples of deviations from proportional hazards that are especially troublesome, according to Dr. Lagakos, are two survival curves that are initially more or less identical but then diverge sharply at a certain time point, and two survival curves that are initially different but then converge after a particular time point. The original analysis noted the former pattern, with the two Kaplan-Meier survival curves more or less coincident for the first 18 months and then separating sharply after 18 months.

When you suspect a violation of proportional hazards, one approach is to model the data using time varying covariates. In particular, you can model an interaction between time and treatment or an interaction between log time and treatment.
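In standard textbook notation (the symbols below are not taken from the NEJM article and are introduced here only for illustration), a Cox model with a time-by-treatment interaction can be written as follows, where Z is the treatment indicator, h_0(t) is the baseline hazard, and g(t) is either t or log t:

```latex
\begin{align*}
h(t \mid Z) &= h_0(t)\,\exp\bigl(\beta Z + \gamma Z\, g(t)\bigr),
  \qquad g(t) = t \ \text{ or } \ g(t) = \log t, \\
\mathrm{HR}(t) &= \frac{h(t \mid Z = 1)}{h(t \mid Z = 0)}
  = \exp\bigl(\beta + \gamma\, g(t)\bigr).
\end{align*}
```

The hazard ratio is constant over time, so proportional hazards holds, exactly when gamma equals 0. A test of the interaction is therefore a test of the null hypothesis that gamma is 0, and the choice of g(t) determines which kind of departure the test is most sensitive to.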

This is where things went seriously wrong.

The APPROVe investigators planned to use an interaction test with the logarithm of time as the primary basis for testing the proportional-hazards assumption. This test resulted in a P value of 0.07, which did not quite meet the criterion of 0.05 specified for rejecting the assumption. However, the original report of the APPROVe trial mistakenly gave the P value as 0.01, which was actually the result of an interaction test involving untransformed time. (This error is corrected in this issue of the Journal.)

Dr. Lagakos notes that even if the test for interaction had not been reported in error, there would still be problems. The presence of an interaction could imply any of several deviations from the proportional hazards assumption, not necessarily a deviation that represents similar risk for the first 18 months and dissimilar risk thereafter. He also points out that a graphical inspection of the Kaplan-Meier curves for violations of proportional hazards is potentially misleading.

Finally, Dr. Lagakos reminds us that identical survival curves during the first 12-18 months do not, in and of themselves, imply that a short-term course of rofecoxib is without risk. Many exposures, such as radiation, have a latency period, and a divergence of risk at a later time point could occur even with a brief exposure that shows no change in risk during the short term.

Questions

1. Why does the drug company (Merck) have a financial incentive to demonstrate that exposure to rofecoxib increases risk only in the long term and not in the short term?

2. This is not the only study on rofecoxib that required a clarification or retraction (see the above article, Independence of a DSMB is questioned), nor the only study of Cox-2 inhibitors that has been criticized. Are these retractions evidence that problems with incorrect data analyses are self-correcting, or are they evidence that the peer-review process is broken?

Submitted by Steve Simon

Figures

The following two figures were added by Laurie Snell. The first figure is from the authors' original paper and the second is from their recent correspondence in the NEJM. In the original article the authors stated that the risk of thrombotic events was not apparent until after 18 months. After correcting the errors in that paper and adding additional data, they conclude that the risk is now apparent after 3 months.

Figure 2: Kaplan–Meier Estimates of the Cumulative Incidence of Confirmed Serious Thrombotic Events.